[1]:

import hmclab

from hmclab.Samplers import HMC

import numpy

Separate priors per dimension#

Sometimes the parameters that we try to infer are sufficiently different such that we can’t describe our prior knowledge using only one type of distribution. For example, trying to find an Earthquake hypocenter and origin time might mean that we have a Gaussian prior on its depth, but no clear preference of the origin time (as an Earthquake might of course occur anytime, before the observed arrival times at least).

The HMCLab package has a simple interface for doing this: 1. We create the priors for all relevant parameter groups separately; 2. We combine these distributions using hmclab.Distributions.CompositeDistribution. This leaves the order in which the distributions intact; 3. We use the resulting distribution as a prior or directly sample on it, i.e. what we would normally do with a prior.

Below we illustrate the process.

Create prior 1, a Normal distribution on one dimension.

[2]:

prior_1 = hmclab.Distributions.Normal(

means=numpy.ones((1, 1)),

covariance=4 * numpy.ones((1, 1)),

)

Create prior 2, a two dimensional Laplace distribution with zero mean and unit dispersion.

[3]:

prior_2 = hmclab.Distributions.Laplace(

means=numpy.zeros((2, 1)), dispersions=numpy.ones((2, 1))

)

Combine the priors

[4]:

prior = hmclab.Distributions.CompositeDistribution([prior_1, prior_2])

Note: Throughout this notebook, we’ll sample various distributions. Tuning settings are updated accordingly, but not discussed. Keep this in mind when playing around with the notebook; you might need to update parameters, primarily step lengths.

Note:

When combining e.g. distribution on parameter 1 and parameter 2 in this way, the function CompositeDistribution returns the following mathematical construct:

where the various \(\chi\)’s represent negative logarithms of probabilities (misfit functions).

The gradient of the constructed misfit function is subsequently defined as:

This is constructed automatically.

Create the sampler object and sample using a unit mass matrix.

[5]:

filename = "bin_samples/tutorial_2_composite.h5"

HMC().sample(

filename,

prior,

mass_matrix=hmclab.MassMatrices.Unit(prior.dimensions),

proposals=10000,

stepsize=0.5,

overwrite_existing_file=True,

)

[5]:

Visualize the resulting samples.

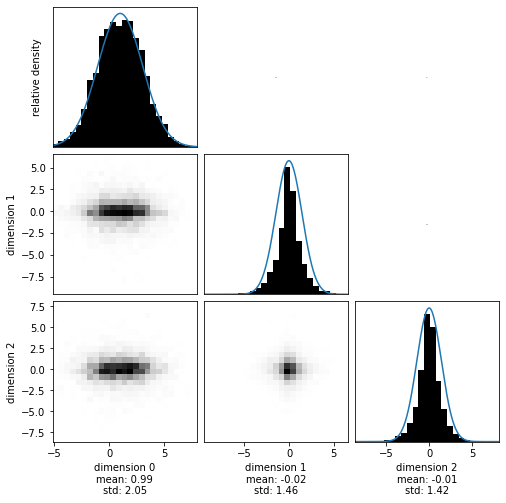

[6]:

with hmclab.Samples(filename) as samples:

hmclab.Visualization.marginal_grid(samples, [0, 1, 2], show=True)

Perfect! The posterior has (almost to machine precision) zero correlation. This si exactly what we expect, as we haven’t introduced any in our prior. Additionally, the marginals perfectly capture the different distributions.

Try to get a feeling of convergence:

[7]:

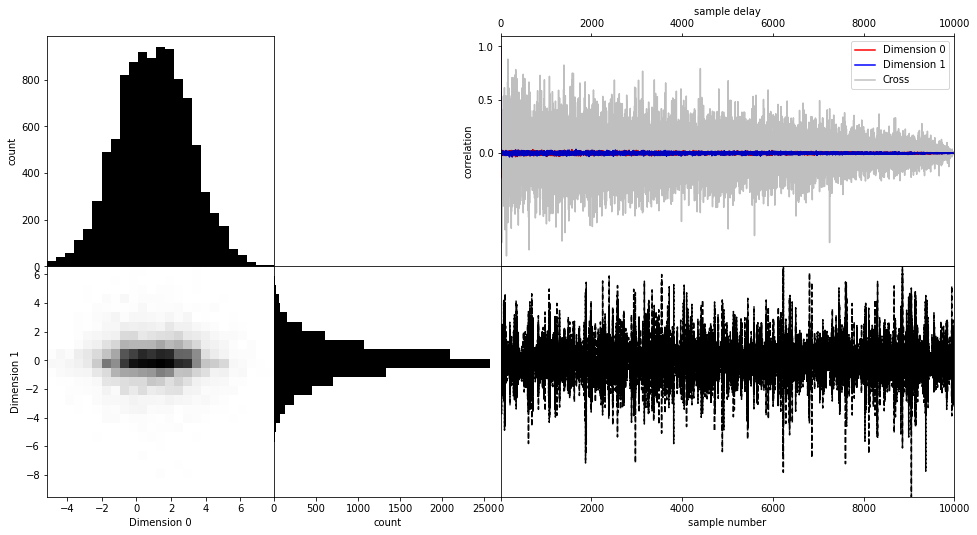

with hmclab.Samples(filename) as samples:

hmclab.Visualization.visualize_2_dimensions(samples)